Product area

— AI-driven collaboration platform

— Generative AI enablement tool

— Natural language processing

Team composition

— Lead product designer (me)

— Product manager

— Technical lead

— Full-stack developers (3)

— AI/ML engineers (2)

— QA engineer

My contribution

— User research and analysis

— Product discovery and strategy

— IA and workflow optimization

— Prototyping and testing

— Visual and interaction design

Duration

Mar 2024 – Oct 2024 (8 months)

Introduction

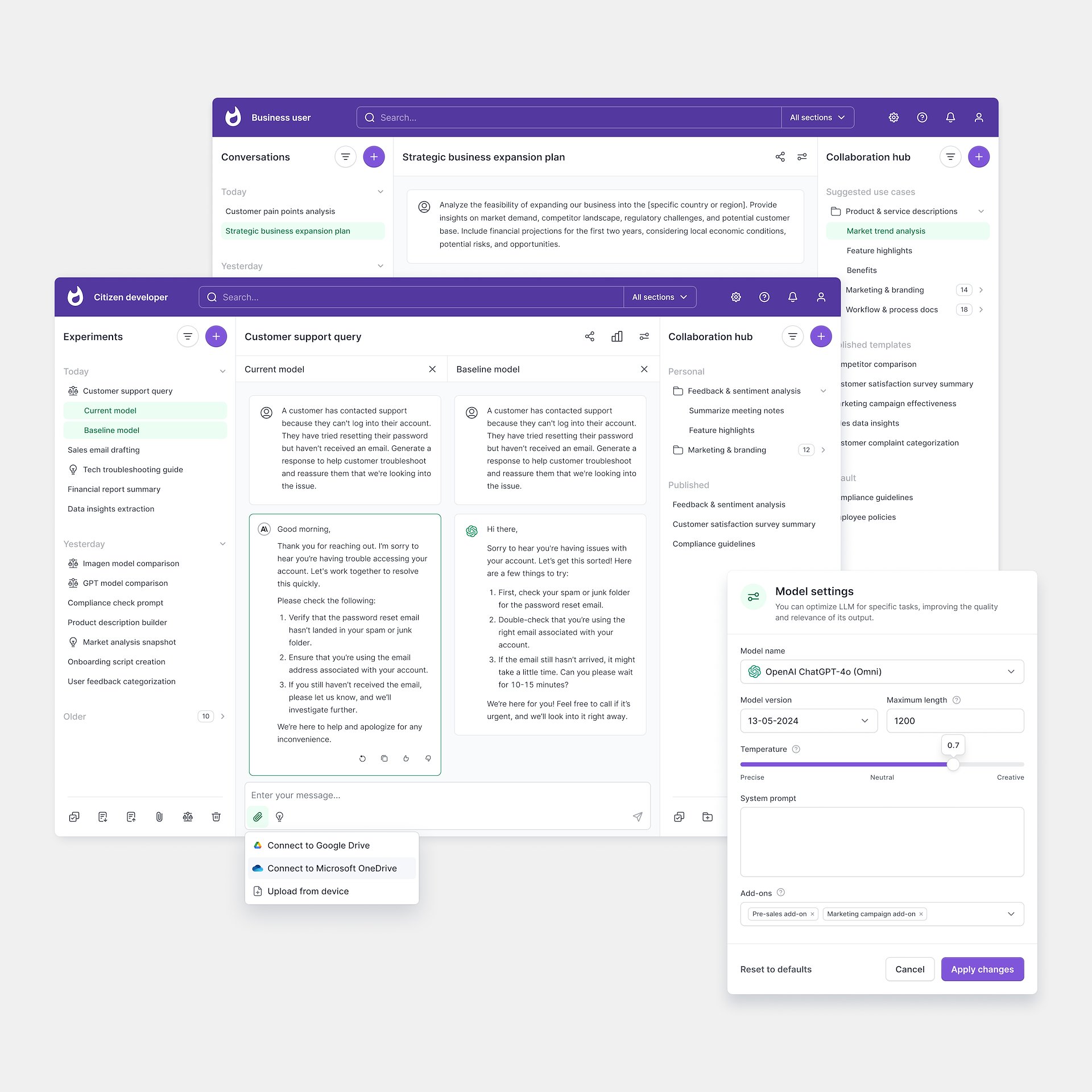

Working with Generative AI in large companies is often confusing. People come in with different levels of knowledge and struggle to work together. This leads to delays, duplicate work, and unclear outcomes. To solve this, we created a web platform where teams can build, test, and share AI use cases in one place. It became an important step in the company’s plan to bring AI tools into its products and help businesses use AI more effectively.

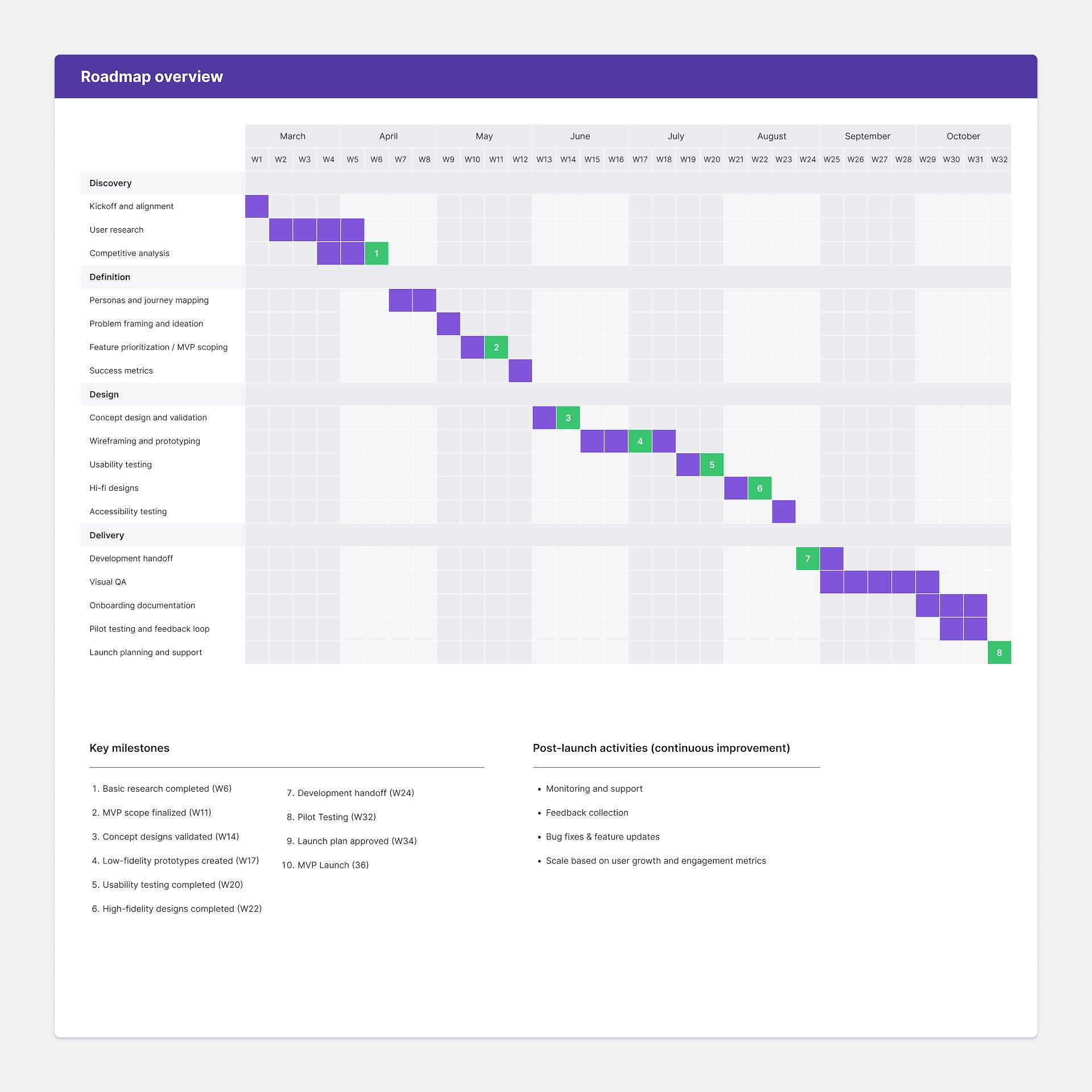

The design process had four stages: Discovery, Definition, Design, and Delivery. I was responsible for leading the design through all of them.

Discovery

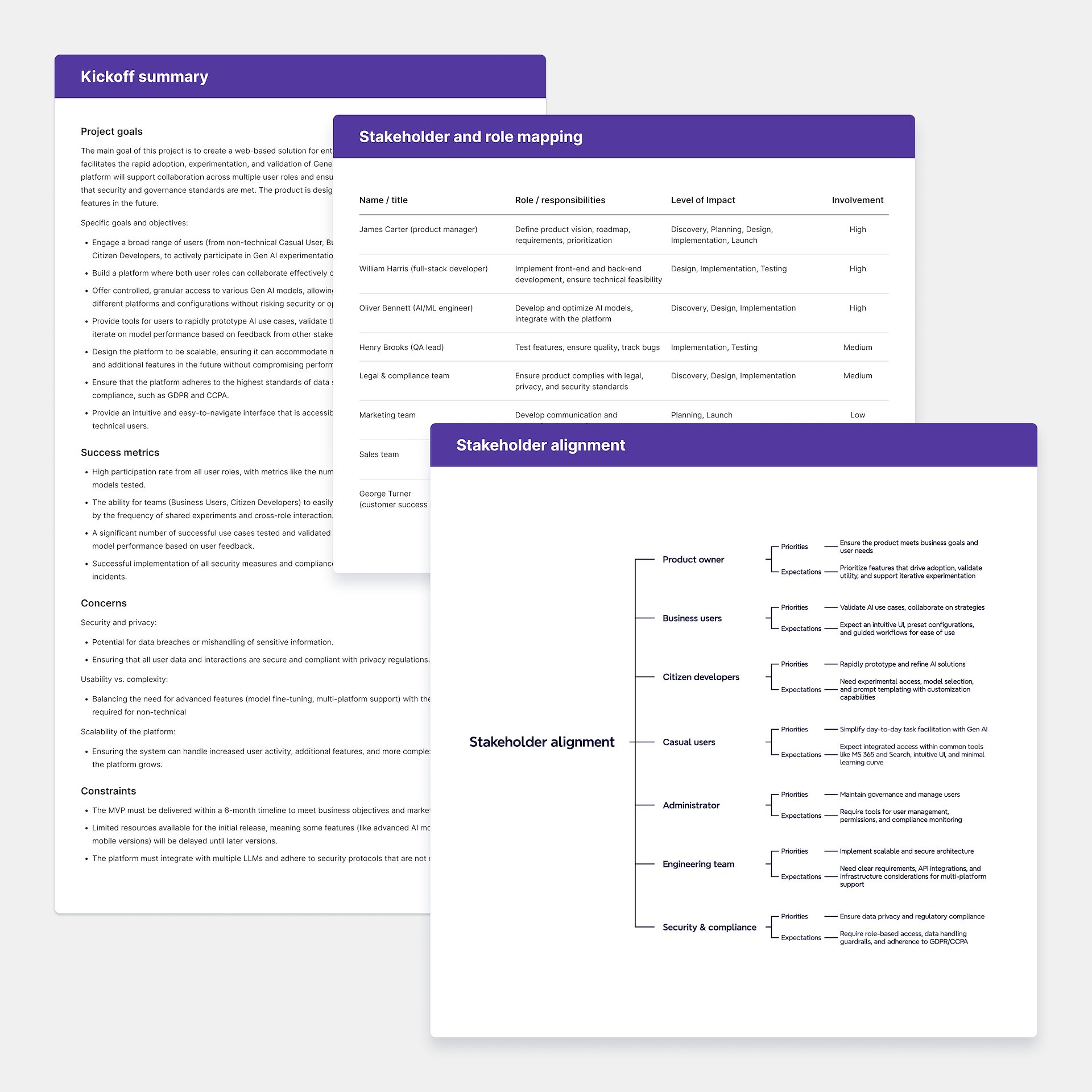

We began with a kickoff session to align on the vision, goals, and limits of the project. The main purpose of the platform was to help organizations adopt and test AI use cases through clear and collaborative workflows. Goals included better cross-role collaboration, reusable prompts, and simpler model comparisons for both technical and non-technical users. We also set early success measures, like cutting down the time needed to validate AI use cases and seeing higher adoption among business roles.

I mapped the key stakeholders, focusing on their roles, responsibilities, and influence. I then held individual conversations to learn their expectations, priorities, and concerns. This helped uncover areas of misalignment and build a common understanding across the group. It gave us a clear direction for moving forward together.

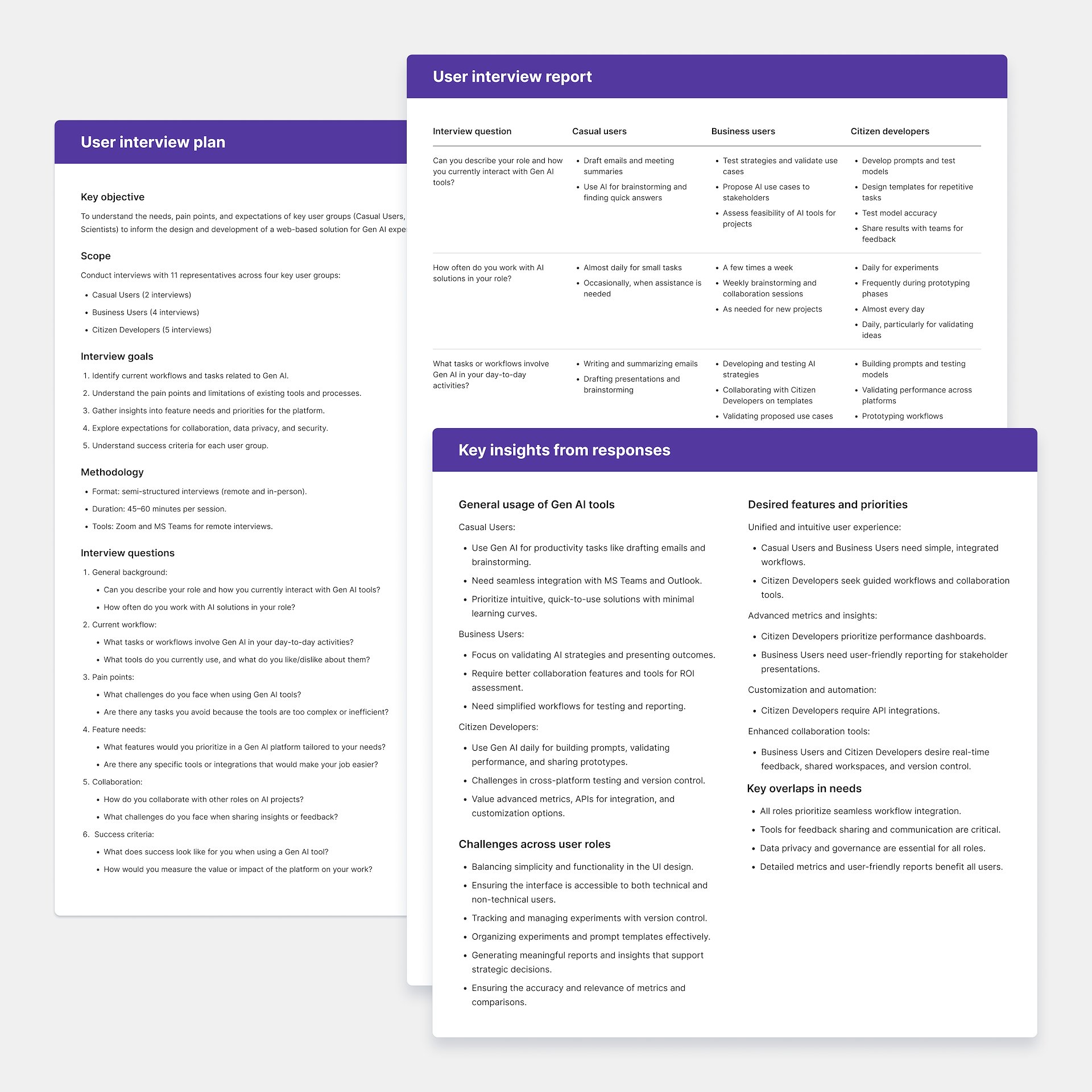

To guide research, I built a roadmap that showed methods, timelines, and how insights would shape design and development. It included competitor reviews, interviews, and user research, as well as milestones for sharing findings internally. Having this roadmap early helped set expectations and gave the work a steady rhythm.

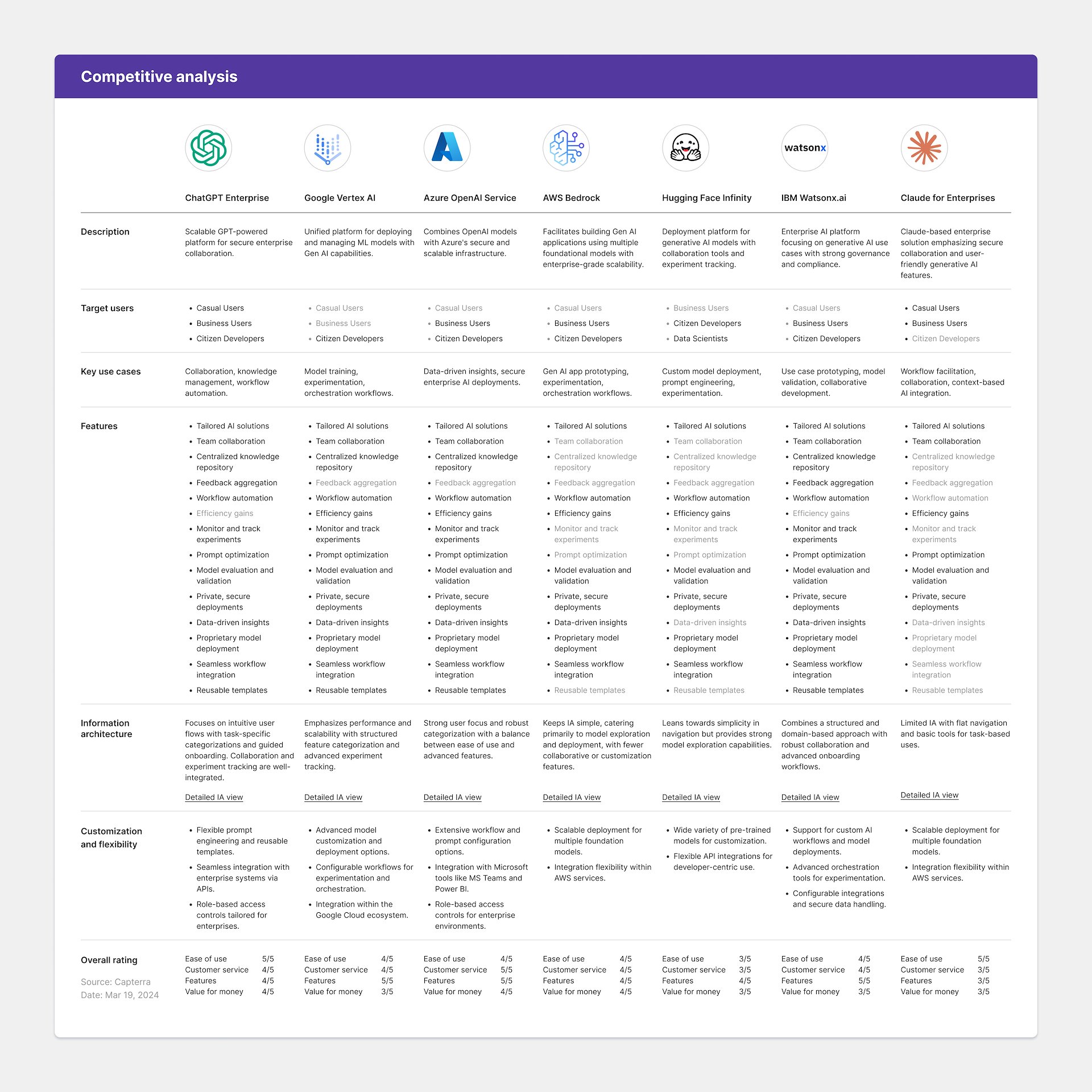

As part of this, I ran a comparative review of existing tools. The focus was on platforms offering features for prompt engineering, model testing, and AI collaboration. We looked at prompt management, multi-model support, onboarding for less technical users, and collaborative workflows.

The review showed that most tools were either too technical or lacked clear workflows. Many assumed users already had deep AI knowledge. Few offered role-based access, reusable templates, or structured validation. These gaps highlighted the chance to design something more flexible and accessible to a wider range of people.

We then carried out interviews with three main user types: Casual Users, Business Users, and Citizen Developers. The sessions looked at how people currently used AI tools, their workflows, and where they faced challenges.

Findings were collected and turned into clear insights. Common themes included difficulty writing prompts for unfamiliar tasks, a lack of structure in collaboration, and a need for templates and shared examples. People also wanted more transparency when comparing outputs from different models.

Definition

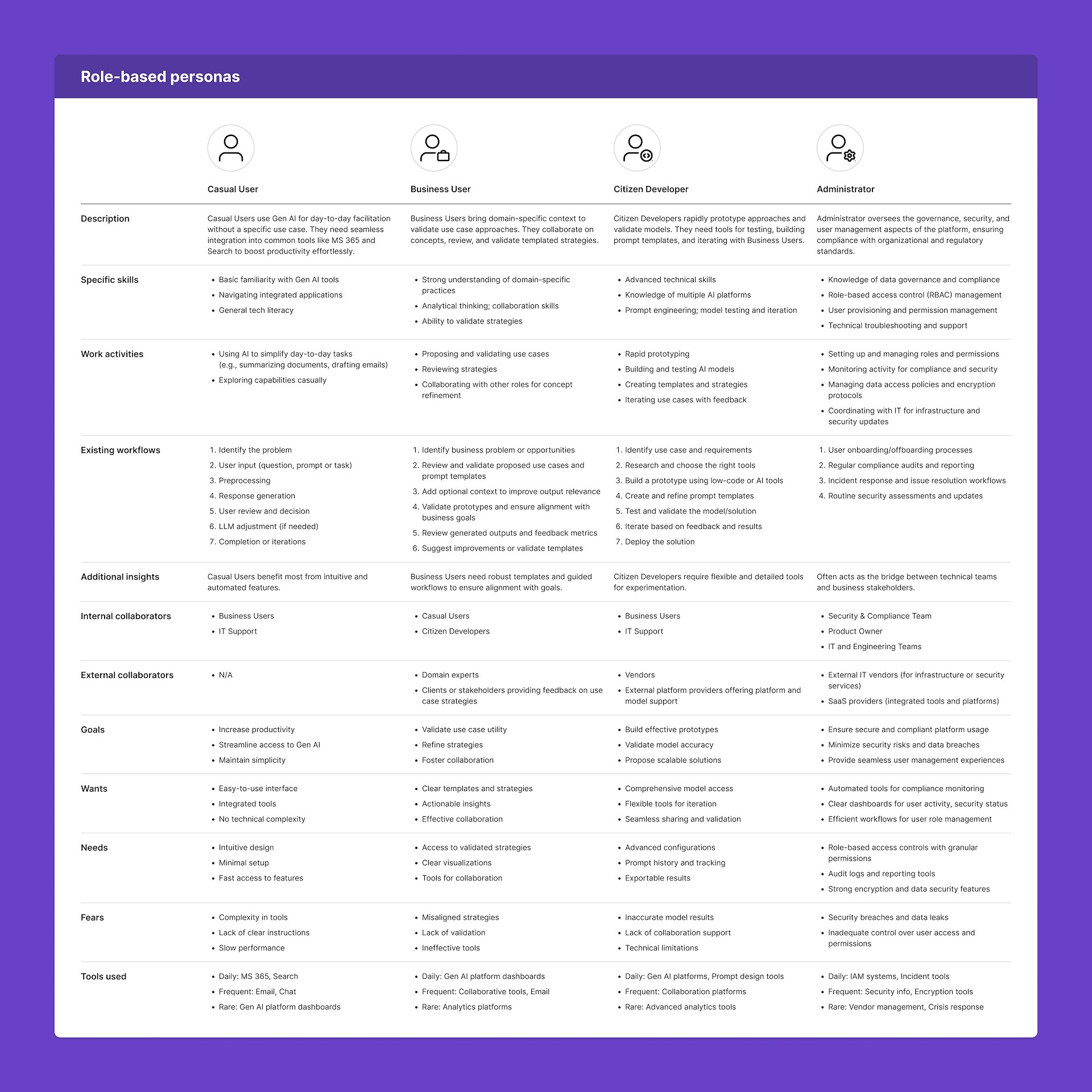

With research in place, I created role-based personas to capture who would use the platform and how. These covered both non-technical users and those with advanced AI knowledge. Each persona focused on tasks, workflows, tools, and collaboration habits, along with goals and concerns. This helped us design for a broader and more realistic set of needs.

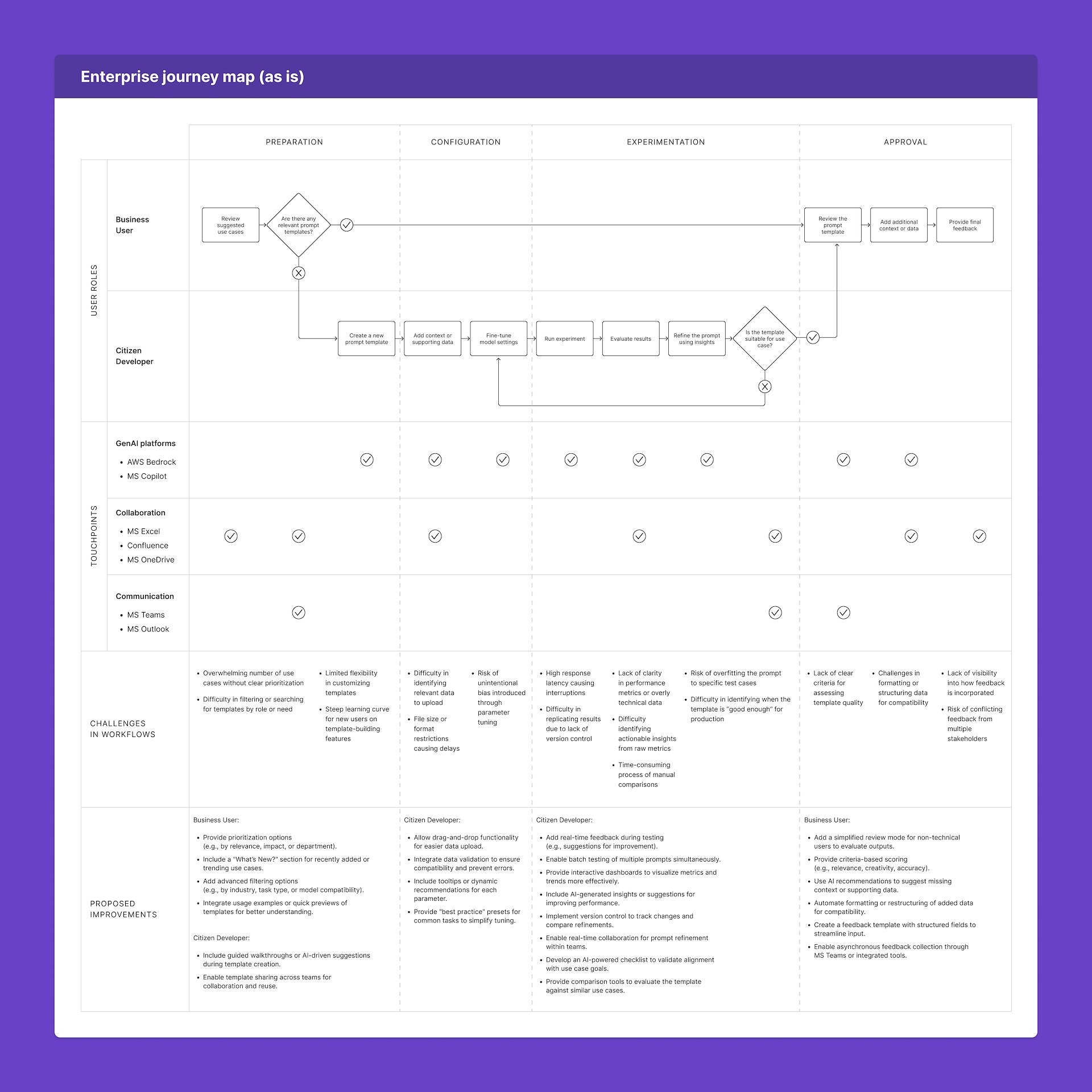

Alongside the user roles, I mapped the user journey to show how people currently worked together in their organizations. The map made it clear where business users and citizen developers faced friction. It also showed opportunities where the platform could make collaboration easier and workflows smoother.

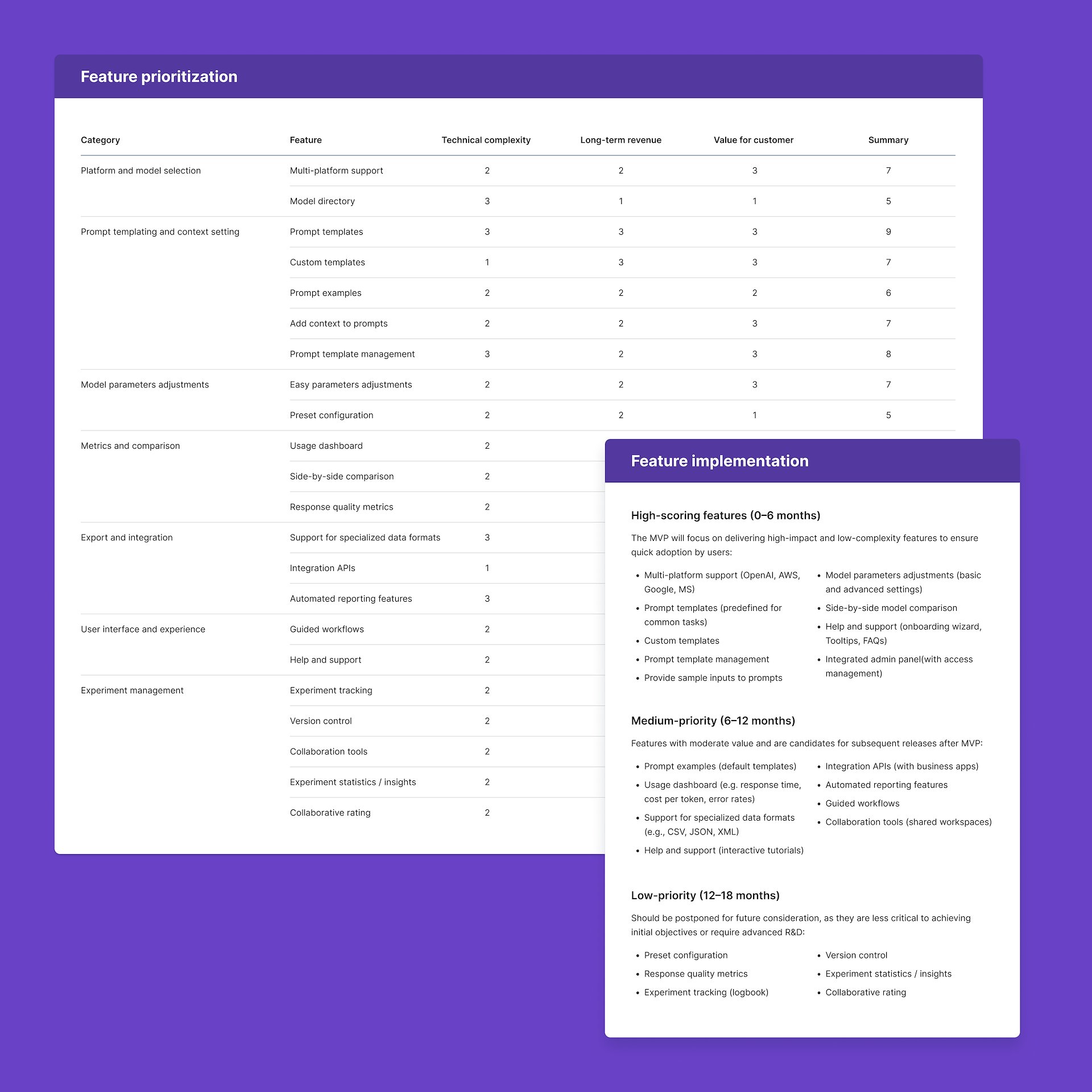

For planning, I built a feature prioritization framework. Features were placed into short, medium, and long-term stages based on impact and feasibility. This helped keep focus on the most important needs first, while giving direction for future growth.

Design

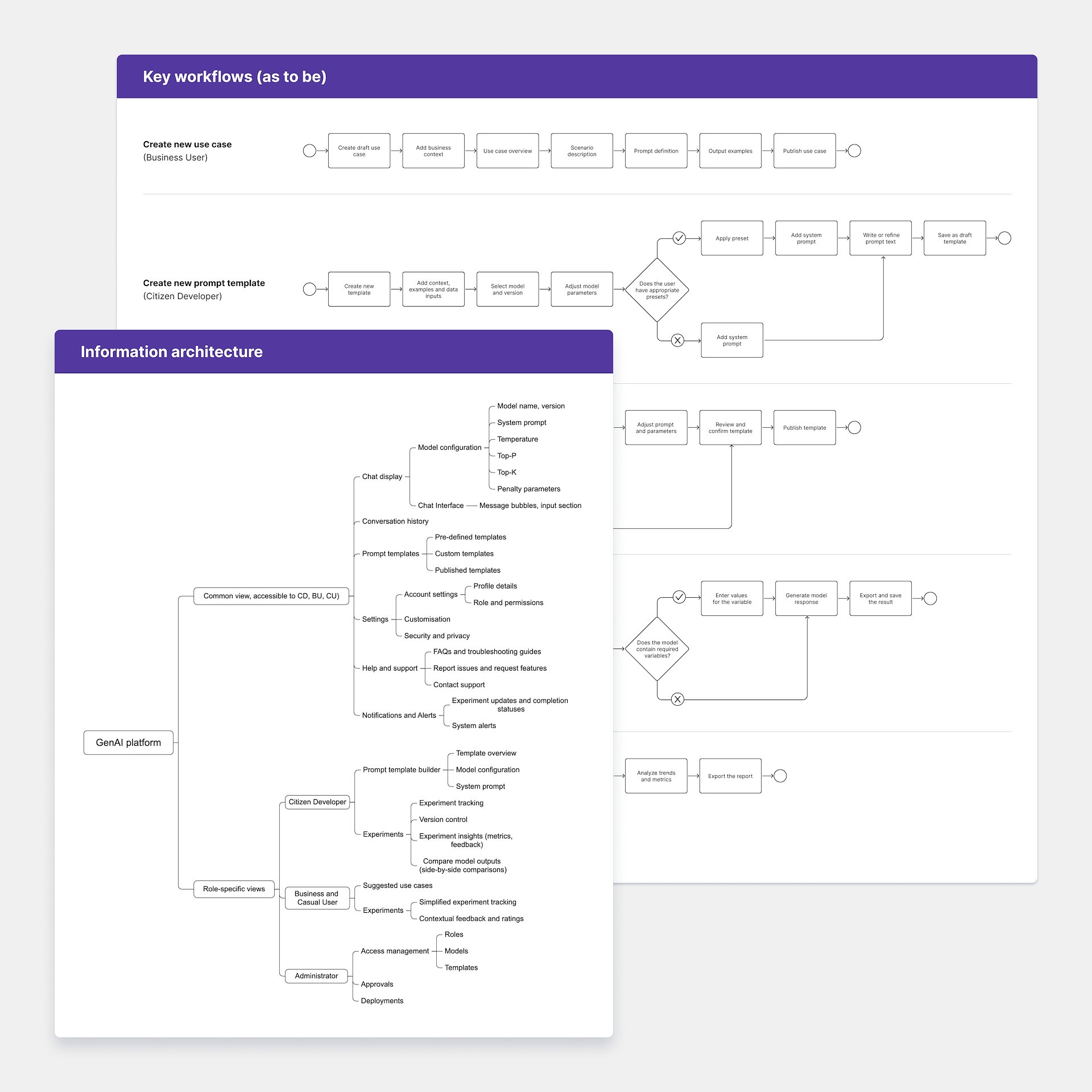

The design phase focused on shaping the product experience. I started with UML diagrams that showed essential user journeys, especially for Business Users and Citizen Developers. These diagrams helped make clear how roles and tasks connected.

Next came the information architecture. It combined shared views for everyone with role-specific sections. This structure reduced complexity and made it easier for people to find what they needed.

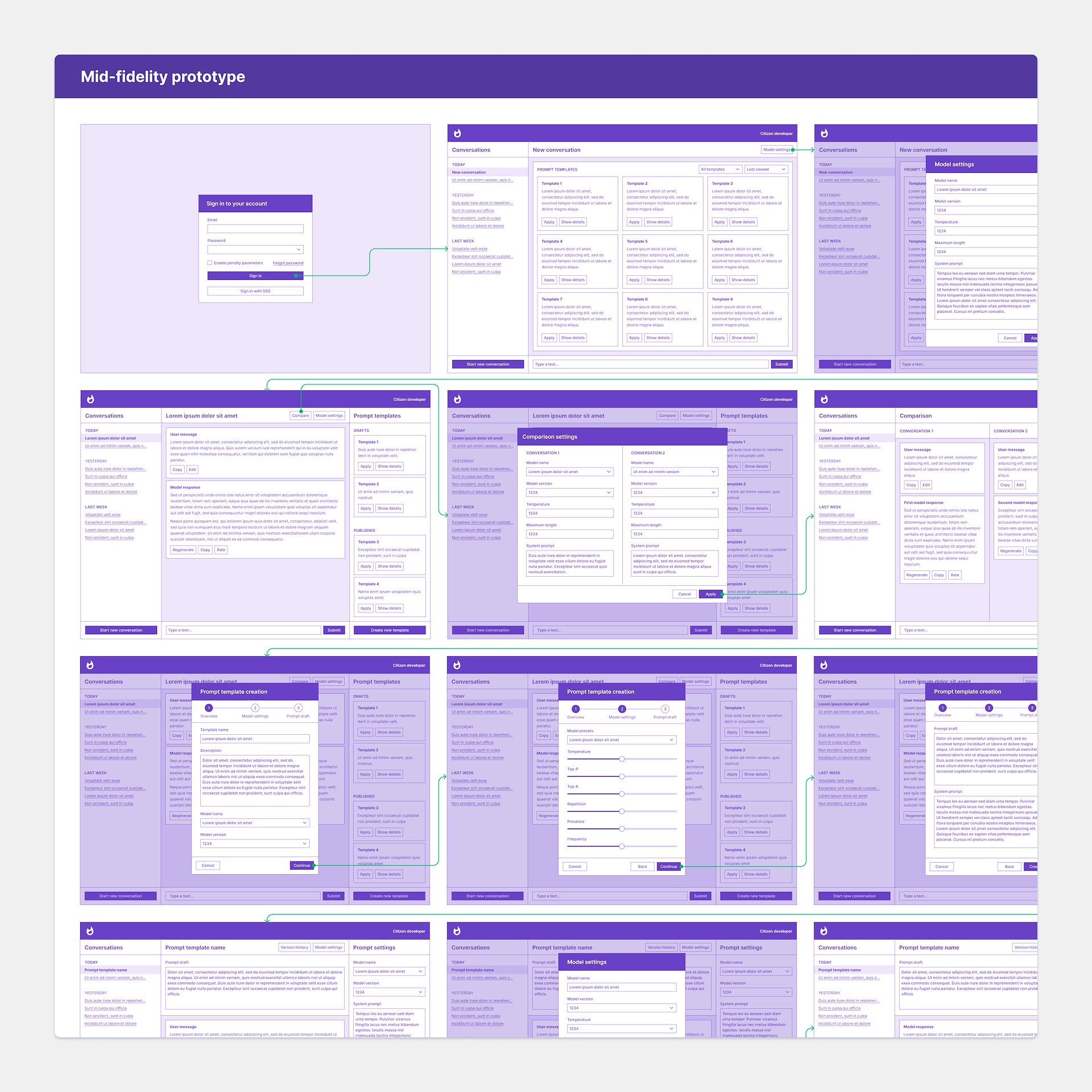

I then created a mid-fidelity prototype to test the structure and flows. It focused on navigation and interactions, not final visuals. The prototype included the core features we had defined earlier, such as reusable templates, model comparisons, and dashboards for each role. This helped visualize how different users would interact with the platform.

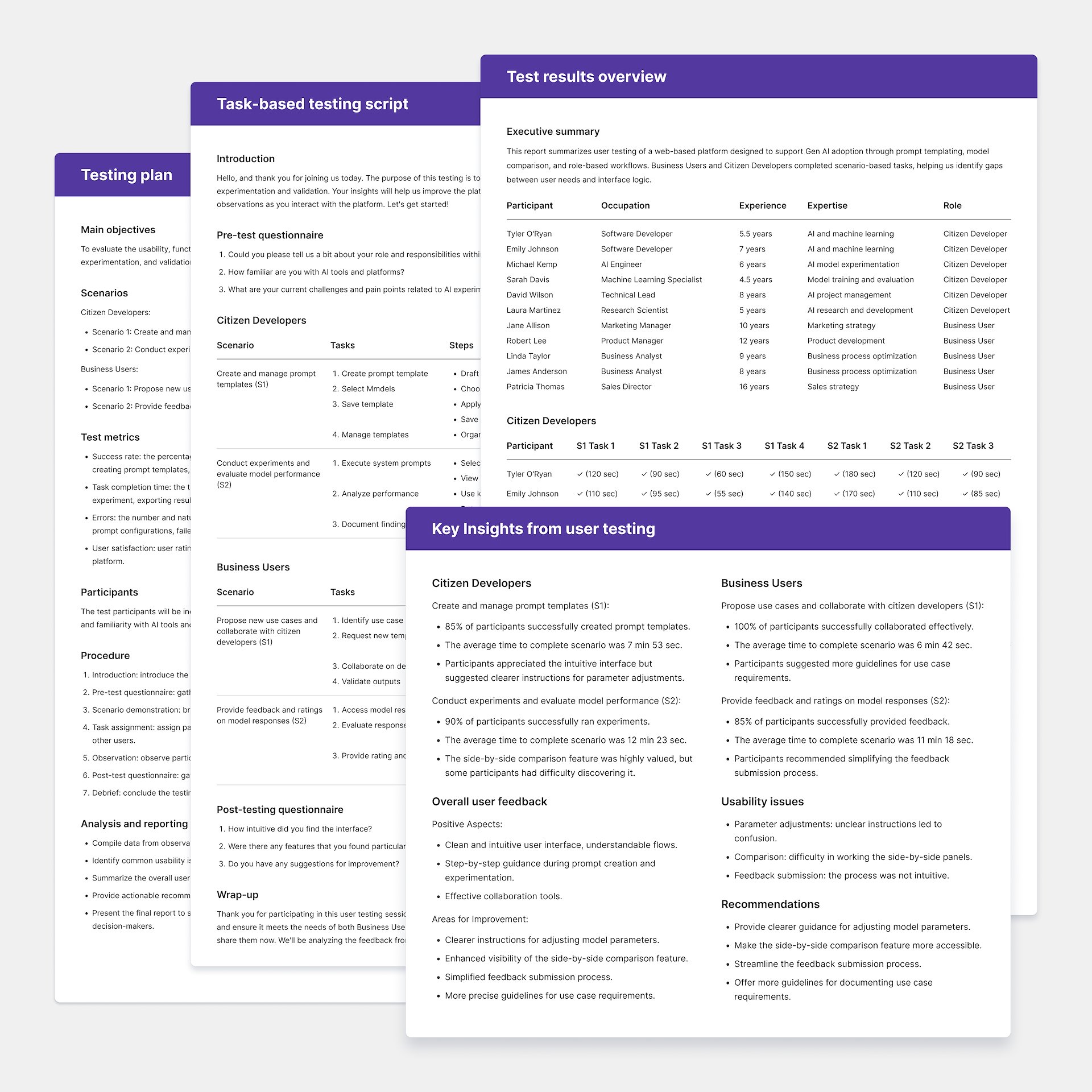

I then used the prototype to plan and run usability testing. I prepared scenarios, tasks, and success measures, along with a script for each session. Participants went through role-specific tasks, and I documented their feedback. The resulting report summarized what worked, what didn’t, and suggestions for improvement.

Delivery

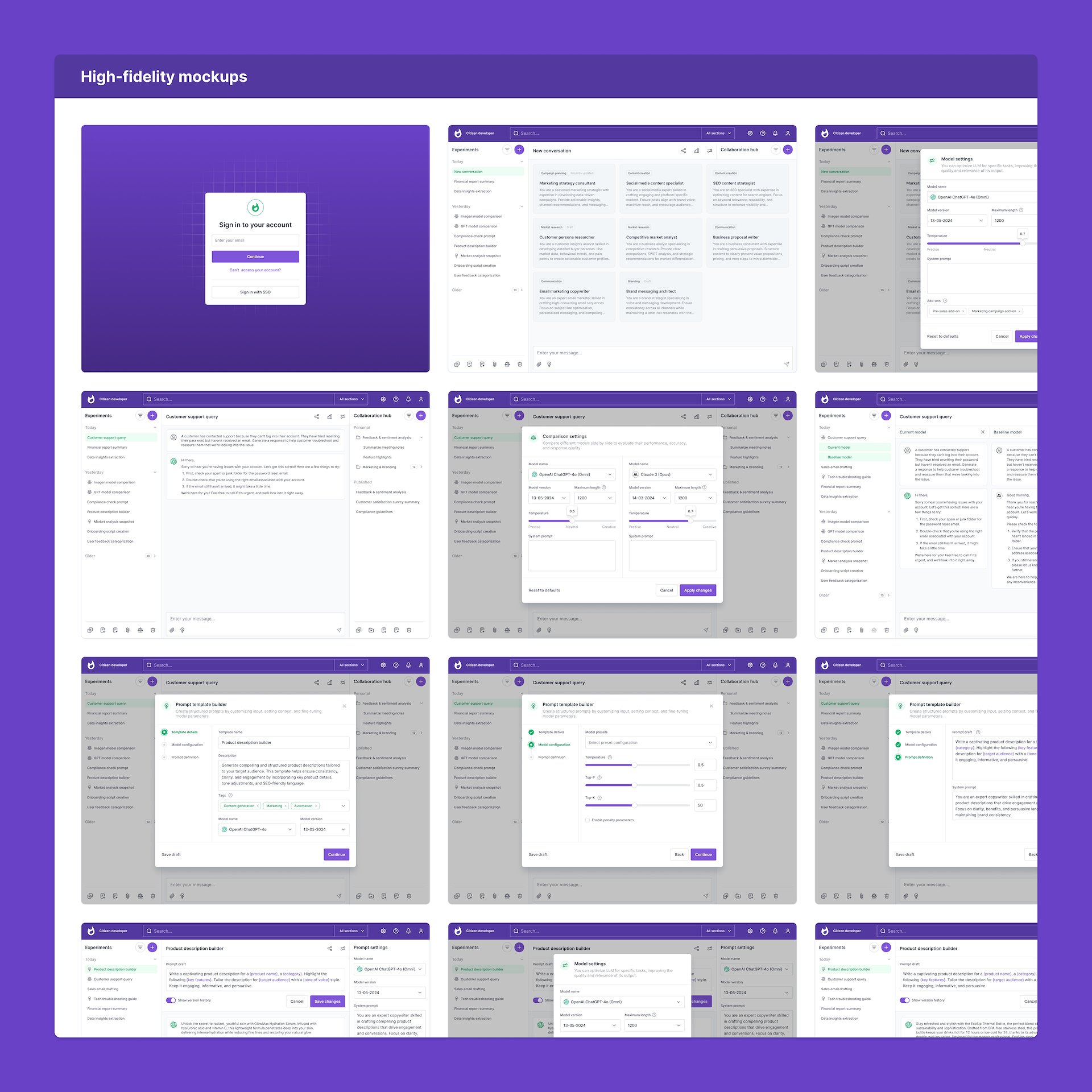

The Delivery stage was about finalizing visuals and handing over to development. High-fidelity mockups were prepared, showing full design and interactions. These became the source for development handoff.

During build, I ran Visual QA cycles. Each round looked for gaps between design and implementation. Issues were tracked and fixed, keeping quality close to the original design.

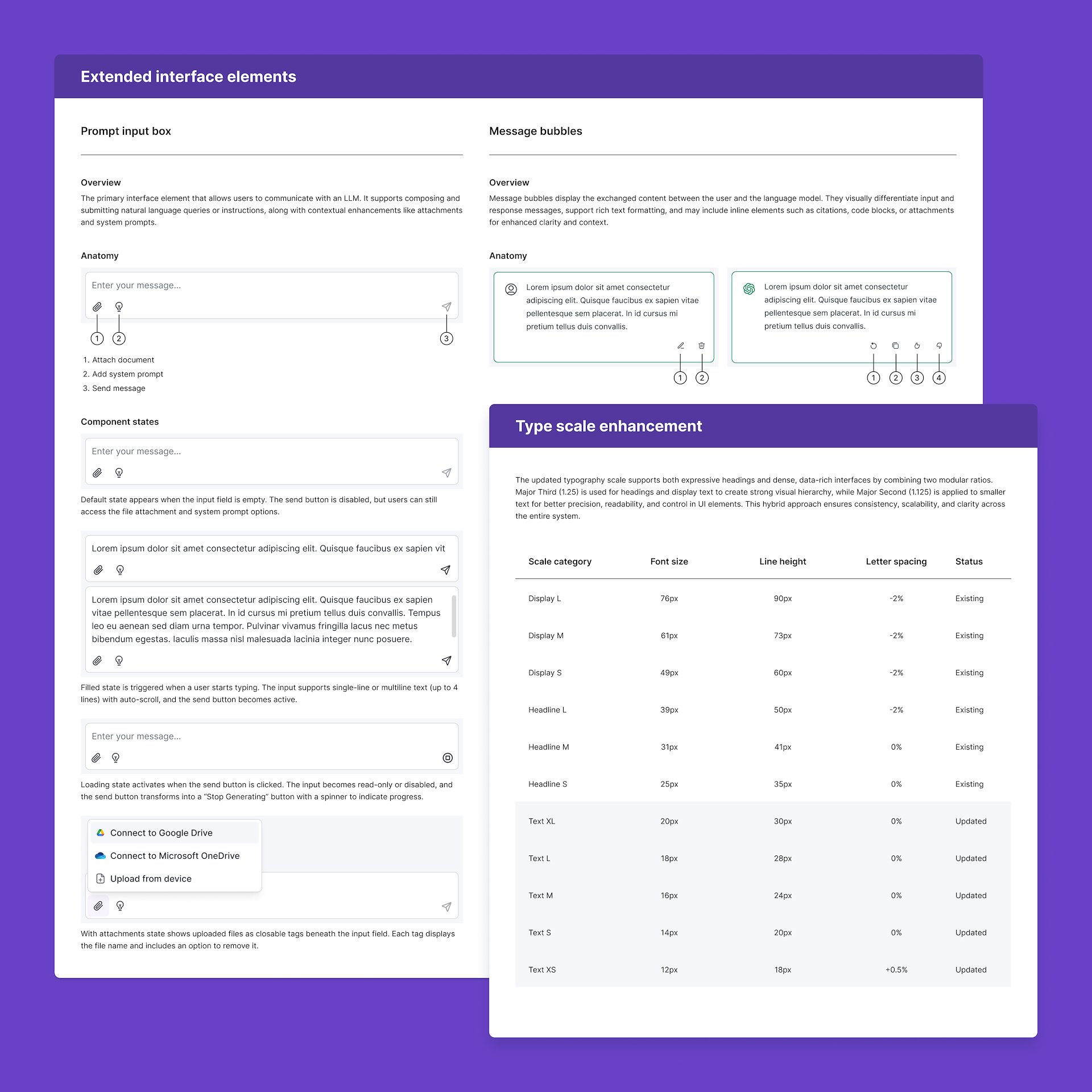

The design system was updated with new styles and components to support the platform. This included colors, type, spacing, and icons, as well as new components like template cards, model comparison screens, and step-by-step flows. Everything was documented with rules and examples to make reuse easier.

Alongside this, I added usage patterns to guide teams in applying components in different contexts. This included building layouts for roles, handling validation states, and supporting collaboration features. The documentation helped scale the system for future work.

Achievements

The MVP launch gave us strong signals of success:

— More than 80% of users across all groups took part, running over 500 experiments and reusing prompt templates.

— Business and technical roles worked together an average of 15 times a week, showing that role-based workflows and shared assets were effective.

— Over 50 AI use cases were tested and refined with user feedback, leading to a 30% improvement in model performance.

The platform also met all security and compliance needs. There were no incidents during the pilot, which gave confidence in rolling it out further.

These results showed that the platform could meaningfully improve how teams work with Generative AI. They confirmed the original assumptions and gave a clear path for the next iterations.